Together with a friend, I set out to move our servers from a virtual environment at a local ISP's site to our own hardware, which would host our VPSes.

Fortunately we had a site where we could run our hardware for only 5€/month plus energy costs. So we set out to build a small, energy-saving server that would be sufficient to host our small VPSes ourselves instead of paying a company to do that.

In the end we had our own VPS host server, which is a lot of fun, taught us a lot and is ultimately cheaper than renting a VPS in the long run.

These were our aims with top priority first:

There were some additional requirements given by our use cases:

We chose the CPU first because it is an important decision and it was easy to make with the help of Intel's ARK website (a very handy CPU database).

Of course we also considered AMD's processors, but AMD made the job quite difficult by not offering an equivalent to Intel's ARK. However, I quickly found out that they didn't offer any CPU comparable to the one we found from Intel in terms of feature set or power consumption.

Processors from VIA or even non-x86 CPUs were out of the question because they didn't offer the features we required.

To select our CPU I just made the following selection at the ARK database:

The result set was quite short, and by looking for the CPU with the lowest TDP I found the Intel Xeon 1220L with only 20W TDP.

Of course the TDP is not a perfect indicator of a processor's real average power consumption, but it was clear that this CPU supported all our required features while avoiding power-hungry extras such as a high number of CPU cores. It would still be powerful enough to compete with our existing VPSes, and it was by far the cheapest CPU among the possible candidates.

The main memory was not a huge topic for us. The CPU supported DDR3-1333 with ECC so we looked for a cheap kit of 2×2GB memory of that type. For historical reasons we chose Kingston ValueRAM but any brand would do. As far as we know there is no significant difference in defect rate between different manufacturers. Also we didn't care about slight timing differences.

Before I compared prices I had expected that an off-the-shelf barebone would be a fairly cheap solution which would save us some headaches. Buying a package of several products is often cheaper than the sum of the individual items' prices.

But while comparing the features of some Asus, Supermicro and Tyan barebones I realized that Supermicro offered all the components of their barebone computers as separate parts. To my surprise, building the same system from the parts instead of purchasing the ready-made barebone would save us about 200€. Therefore we stopped considering a barebone.

I always loved Intel's mainboards for Intel's processors because they worked absolutely flawlessly. They had some drawbacks, like a higher price and quite limited BIOS options, but that had always been acceptable for me.

So when looking for a nice motherboard to host our 1220L Xeon I had a look at Intel's LGA1155 boards. I quickly found the S1200BTx series with the models S1200BTL (ATX, large) and S1200BTS (µATX, small). At that time I planned to purchase a huge 4U case (see below) and considered the S1200BTL. However, due to its lower price I provisionally chose the smaller S1200BTS.

Later this decision was called into question, see part II.

Before the project I had expected that a generic 19″ case with 3U or 4U would be the simplest and cheapest solution. Such a case would follow the generic ATX specs and fit all kinds of motherboards. Additionally I thought cooling would be no problem because there would be enough free air space to spread the heat.

Contrary to my former belief, I found that new cases of that size were quite expensive. I think that is because of the high price of metal in larger quantities. I tried to find used ones of the expected quality for free, without success. Bidding on used cases of unknown quality and condition, e.g. at eBay, seemed too risky to me. I didn't like eBay anymore anyway (e.g. because of PayPal).

For small systems a small case is really better because the larger the case, the more fans are inside. Also, the larger cases were even more expensive, as mentioned above. We ended up looking for a small 1U case.

Supermicro offers a wide range of different cases from 1U to 4U and towers. The 1U cases are quite cheap and provide reasonably good quality but it was really hard to find the right one for our project.

| Model | PSU | Chassis fans | Remarks |
|---|---|---|---|
| SC502L-200B | 200W, fan continuously running | none | Update: insufficient for selected board! |
| SC502-200B | 200W, 80+ gold certified | none | Update: insufficient for selected board! |
| SC503L-200B | 200W, fan continuously running | none | I/O ports at front |
| SC503-200B | 200W, 80+ gold certified | none | I/O ports at front |
| SC510-203B | 200W, 80+ gold certified | two 40mm | |
| SC512-203B | 200W, 80+ gold certified | one 100mm | ATX capable → largest model |

The cases with a PSU not certified for the 80+ gold efficiency level are interesting because their continuously running fan might move away enough hot air to allow using a passive CPU cooler.

Of course we favored the cases without active fans because fans would consume additional power (at full speed 12W per fan according to Supermicro!). I got in contact with Supermicro and they told me that the passive cooler would be sufficient for up to 45W in the SC502L-200B case. With the 20W Xeon that should be enough. This is why we eventually decided to purchase a case without a gold level PSU. I guess that the technology in the PSU is the same and that it is just built to never stop its fan, which is why it wasn't certified for 80+. However, I have no proof of that.
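To put the 12W per fan into perspective, here is a rough sketch of what one fan running at full speed would cost per year. It assumes continuous operation and borrows the 0.30€/kWh rate (including UPS backup) quoted further down in this article; the function name is made up for illustration.

```python
HOURS_PER_YEAR = 24 * 365  # assumption: runs around the clock, leap days ignored


def yearly_energy_cost(watts: float, eur_per_kwh: float) -> float:
    """Cost in EUR of a constant electrical load running for one year."""
    kwh = watts * HOURS_PER_YEAR / 1000
    return kwh * eur_per_kwh


# 12W per fan at full speed (Supermicro's figure), 0.30 EUR/kWh incl. UPS
fan_cost = yearly_energy_cost(12, 0.30)
print(f"{fan_cost:.2f} EUR per fan and year")
```

In practice the fans rarely run at full speed, so this is an upper bound rather than an expected cost.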

Update: Later we had some problems with this case which forced us to send it back and switch to another one, see part II.

At first I had chosen the Intel S1200BTS but then Supermicro told me that the layout of the ATX I/O panel wasn't guaranteed to match their cases. Keep in mind that the Supermicro cases do not have an interchangeable ATX I/O panel.

After reading Supermicro's message I was a bit disappointed, but then I had a look at their set of LGA1155 mainboards. They are quite good and actually quite similar to Intel's board design. Additionally they were a bit cheaper than Intel's product, so I was convinced.

| Model | Chipset | Network ports | Remarks |
|---|---|---|---|
| Intel S1200BTS | C202 | 2×LAN | |
| X9SCL | C202 | 2×LAN | |
| X9SCL-F | C202 | 2×LAN, IPMI | |
| X9SCL+-F | C202 | 2×LAN, IPMI | two independent, thus redundant LAN controllers (source) |
| X9SCM | C204 | 2×LAN | 2×SATA-600 |
| X9SCM-F | C204 | 2×LAN, IPMI | 2×SATA-600 |

The label IPMI means there is an additional RJ45 jack which is dedicated to the management system integrated in the chipset.

The X9SCL+-F has two Intel 82574L LAN controllers which can work independently. That is different from all other implementations, which have a kind of master and slave chip. If the master chip dies, both network ports are dead. With the X9SCL+-F's network ports you could run a redundant network connection that survives the failure of a single controller chip.

You might think that sounds a bit paranoid and is a rare edge case. I would have said that myself before I experienced exactly that problem with a server at work in 2011. Its network controller talked to the operating system correctly, but nothing at OSI layer 3 or higher came through.

The Intel Xeon 1220L comes in a so-called tray package, which means that there is no cooler or fan included. I really like Intel's standard coolers, but for a server CPU which might end up in anything from a tower to a 1U server it's a bit difficult to define a standard cooler.

So we needed to purchase an additional cooler for our 1U chassis. The main question was whether it should have a fan (active cooler) or not (passive). I thought a lot about that and then decided to write to Supermicro in order to get a well-founded answer. Fortunately they sent a comprehensive reply and wrote that their cases could move up to 45W of heat away from the CPU even with the passive CPU cooler. So it seemed safe to purchase a passive cooler.

| Model | Metal | Fan | Remarks |
|---|---|---|---|
| Supermicro SNK-P0046P | aluminium | none | |
| Dynatron K1 | aluminium | none | |
| Dynatron K2 | copper | 75mm 4000RPM | specially designed for Mini-ITX systems |
| Dynatron K129 | copper | none | |
| Dynatron K199 | copper | 80mm 5000RPM | |

Because copper conducts heat about 70% better than aluminium [aluminium: 235 W/(m·K), copper: 400 W/(m·K)], we spent about 30% more money on the Dynatron K129 instead of Supermicro's cooler. Maybe this helps to get a bit more performance out of the CPU's Turbo Mode.
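The 70% figure follows directly from the two conductivity values. A minimal sketch using Fourier's law for steady-state conduction through a slab; the geometry values are hypothetical and cancel out in the ratio, and a real cooler's performance also depends on fin design and airflow, which this ignores.

```python
# Thermal conductivities in W/(m·K), as quoted above
K_ALUMINIUM = 235.0
K_COPPER = 400.0


def conduction_watts(k, area_m2, thickness_m, delta_t_kelvin):
    """Steady-state heat flow through a slab (Fourier's law): Q = k * A * dT / d."""
    return k * area_m2 * delta_t_kelvin / thickness_m


# Hypothetical geometry, identical for both metals, so it cancels in the ratio
geometry = dict(area_m2=0.0016, thickness_m=0.005, delta_t_kelvin=10.0)

ratio = conduction_watts(K_COPPER, **geometry) / conduction_watts(K_ALUMINIUM, **geometry)
print(f"copper conducts {100 * (ratio - 1):.0f}% more heat")
```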

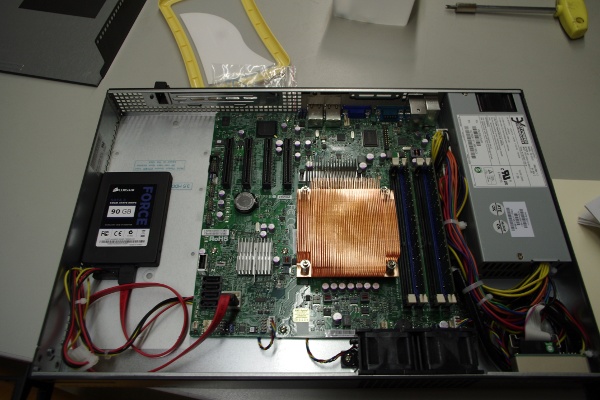

Finally we needed to choose some form of mass storage for the host operating system and our VPS disk images.

For several reasons we decided to purchase an SSD: First of all, SSDs consume much less power than traditional hard disks. Additionally, they are robust and are not harmed by the constant vibrations in a full 19″ rack.

In the end we chose the largest disk that fit into the budget and that was made by a reasonably reputable manufacturer. Fortunately we could afford a SATA-600 device. So we also purchased the SATA-600 version of the mainboard, which allows us some hardware upgrades in the future when even better SSDs are available, affordable and possibly necessary.

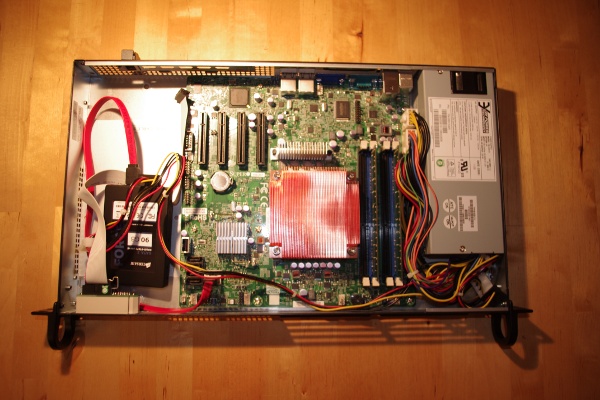

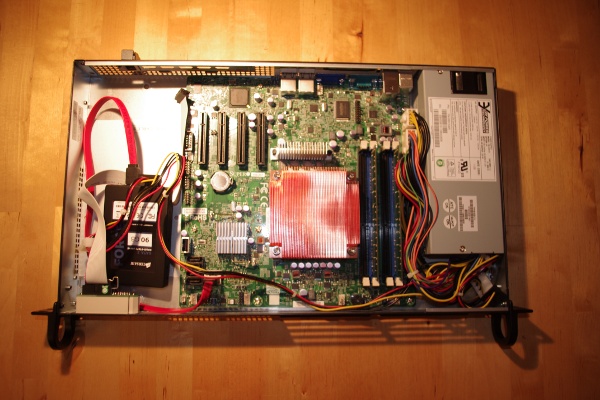

When all parts arrived I was eager to build up the system. Unfortunately it didn't work out because there were some problems with the case.

The first problem was that the front panel cable was too short to reach the connector on the board. I got in contact with Supermicro to find out whether I had received the wrong cable. We could verify that the correct cable had been shipped. The problem is that the original case SC502L-200B was designed to host Intel Atom based systems. Supermicro's boards for Atom CPUs have the front panel connector at the front side (the right side) of the board, while the connector is at the opposite side on our Xeon board. This problem was silly and unnecessary because it wouldn't cost Supermicro much to include a cable long enough for all types of boards. After all, it would have been no problem to make a longer cable ourselves, had it not been for the next issue.

The second problem was harder to solve: the passive CPU cooler just didn't move enough heat away from the CPU. I tried compiling a recent Linux kernel. Within minutes (AFAIR while compiling the 2nd time) the system indicated overheating with loud, repetitive beeping.

So we switched to a different case with front panel fans, in our case the SC510L-200B. We picked it simply because it was available at the shop we purchased from.

Fortunately the fans are very quiet and run at low speed when the system is idle. Because the 20W Xeon doesn't produce much heat at all and the fans are capable of transporting much more heat, the fans won't ever run very fast.

The new, slightly larger case came with a significantly longer cable which allowed us to connect the front panel correctly without making a new cable ourselves.

As mentioned in the introduction, we had the chance to host our server at a local site where we would only pay 5€ per month for connectivity plus the actual energy costs of 0.25€/kWh (0.30€/kWh with UPS backup).

In order to do fair accounting we needed to purchase an energy cost meter that measures fairly accurately. Fortunately the German online electronics store Reichelt offered the KD 302 for only 9.90€, which is a cheap but accurate no-name instrument. I have no information about whether it is available from a different seller or in another country.

We agreed on a surcharge on top of the net energy price in exchange for UPS support for our equipment. It covers the additional costs caused by running the UPS.

We plan to run our server for at least 3 years. In that time the savings on our hosting bills should nearly recoup the initial investment in the hardware.

The estimated costs were based on the assumption that our hardware would consume 50W at most.

Traditional (rented VPSes):

| Item | Costs | Total |
|---|---|---|
| monthly costs | 2 VPSes × 36 months × 9.90€ | 712.80€ |

Self hosted:

| Item | Costs | Total |
|---|---|---|
| initial investment | 617€ once | 617€ |
| energy costs | 36 months × 0.25€/kWh × 50W | 328.50€ |
| addition for UPS support | 36 months × 0.05€/kWh × 50W | 65.70€ |
| IP connectivity | 36 months × 5€ | 180€ |
| sum: | | 1191.20€ |

| Type | Total costs | Monthly costs per VPS |
|---|---|---|
| traditional | 712.80€ | 9.90€ |
| self hosted | 1191.20€ | 16.54€ |
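The figures in these tables can be reproduced with a few lines of Python. This is only a sketch of the arithmetic: it assumes a constant 50W draw around the clock, 365 days per year, and two VPSes sharing the costs equally; the function names are invented for illustration.

```python
HOURS_PER_YEAR = 24 * 365  # assumption: continuous operation, leap days ignored
YEARS = 3
MONTHS = 12 * YEARS
VPS_COUNT = 2


def self_hosted_total(watts, hardware_eur=617.0, eur_per_kwh=0.25,
                      ups_eur_per_kwh=0.05, connectivity_eur_per_month=5.0):
    """Total cost in EUR of running our own server for three years."""
    kwh = watts * HOURS_PER_YEAR * YEARS / 1000
    energy = kwh * (eur_per_kwh + ups_eur_per_kwh)
    return hardware_eur + energy + connectivity_eur_per_month * MONTHS


def rented_total(eur_per_vps_month=9.90):
    """Total cost in EUR of renting the VPSes for the same period."""
    return VPS_COUNT * MONTHS * eur_per_vps_month


estimate = self_hosted_total(50)
per_vps_month = estimate / (VPS_COUNT * MONTHS)
print(f"rented:      {rented_total():.2f} EUR")
print(f"self hosted: {estimate:.2f} EUR ({per_vps_month:.2f} EUR per VPS and month)")
```

Passing a different wattage to `self_hosted_total` shows how strongly the result depends on the power draw.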

Unfortunately the estimated costs were significantly higher than intended, but with some optimization it would be affordable for us. The fun of running your own physical server is priceless.

The selection of products we actually purchased depended on the assumptions described above and, of course, the availability of articles in the shops we ordered from.

| Component | Model | Price as of 2012-02-22 |
|---|---|---|
| case | Supermicro SC510L-200B | 85€ |
| mainboard | Supermicro X9SCM-F | 170€ |
| CPU | Intel Xeon 1220L | 172€ |
| CPU cooler | Dynatron K129 | 27€ |
| RAM | Kingston 2×2GB DDR3-1333 ECC | 38€ |
| SSD | 90GB Corsair Force 3 Series | 105€ |
| energy cost meter | KD 302 | 10€ |
| Shipping | multiple shops | 10€ |
| sum: | | 617€ |

All in all I was very happy to have designed a system which cost about 600€ in total. This was about 200€ less than what I had expected after a first rough calculation with a barebone in mind.

To our surprise the power efficiency of the built system even exceeded our assumptions. During boot it consumed about 30W, and when all power saving features were turned on and the system went idle, the consumption dropped to 23W.

| Power consumption | Monthly costs per VPS |
|---|---|
| 50W estimated | 16.54€ |
| 23W measured | 13.59€ |

After the 64th month (in the 6th year) the costs for hardware and datacenter would equal the costs for traditional VPSes over the same time.
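The break-even point can be checked with a short calculation. The sketch below assumes the measured 23W as a constant draw, an average month of 730 hours, the 0.30€/kWh rate including UPS, 5€ per month for connectivity, and two rented VPSes at 9.90€ each as the alternative; the function name is hypothetical.

```python
import math

HOURS_PER_MONTH = 24 * 365 / 12  # 730 h, assumption: average month


def break_even_months(watts, hardware_eur=617.0, eur_per_kwh=0.30,
                      connectivity_eur_per_month=5.0,
                      rented_eur_per_month=2 * 9.90):
    """Months until cumulative rent matches hardware plus running costs."""
    running = connectivity_eur_per_month + watts / 1000 * HOURS_PER_MONTH * eur_per_kwh
    saving = rented_eur_per_month - running
    if saving <= 0:
        return math.inf  # self hosting would never catch up
    return hardware_eur / saving


months = break_even_months(23)
print(f"break-even after {months:.1f} months, i.e. during month {math.ceil(months)}")
```

The same function makes it easy to see how sensitive the result is to the power draw: every extra watt lowers the monthly saving and pushes the break-even point further out.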